
Latest Posts

Skai Director of Sales joins Podean as Director of Retail Media Partnerships

September 12, 2023

Podean Turkey launches & unveils ecommerce research

August 5, 2022

We are seeing a massive shift toward voice-controlled AI technology as companies aim to provide a more perceptive and user-centric experience for the consumer, but what exactly does this mean for the future of consumer technology?

Industry experts predict that within the next five years almost every application will integrate voice technology in some way. Already, estimates suggest that one in six Americans owns a smart speaker such as the Alexa-powered Amazon Echo, and forecasts suggest that by 2020 nearly 100 million smartphone users will be using voice assistants. Millennials appear to be the driving force behind this change: studies show that this generation is more comfortable interacting with these products, more aware of their voice-controlled features, and finds them easy to use. In response to this sizeable shift in user demand, many new technologies are opting to adopt voice-controlled AI.

Recently, both Amazon and Google announced that their voice-controlled AI assistants will no longer require the “wake” word to be repeated for every request. In the past, these devices needed a wake word to start each new exchange; for instance, Alexa required you to say her name, while Google Home required you to say “Okay, Google” before the device would respond to spoken requests. Now, after starting a conversation with these devices, consumers can ask follow-up questions without repeating the wake word.
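The follow-up behavior can be pictured as a short listening window that reopens each time the assistant answers. The sketch below is a hypothetical model, not Amazon’s or Google’s implementation: the `Session` class, the wake word check, and the 5-second `FOLLOW_UP_WINDOW_S` value are all assumptions chosen for illustration.

```python
from dataclasses import dataclass
from typing import Optional

WAKE_WORD = "alexa"         # assumption: wake word used in this sketch
FOLLOW_UP_WINDOW_S = 5.0    # assumption: seconds the device keeps listening after answering


@dataclass
class Session:
    """Hypothetical model of a conversation session with a follow-up window."""
    last_response_at: Optional[float] = None  # time of the assistant's last answer

    def accepts(self, utterance: str, now: float) -> bool:
        """Return True if the assistant should act on this utterance."""
        if utterance.lower().startswith(WAKE_WORD):
            return True  # the wake word always starts a conversation
        # Without the wake word, only speech inside the follow-up window counts
        return (
            self.last_response_at is not None
            and now - self.last_response_at <= FOLLOW_UP_WINDOW_S
        )

    def respond(self, now: float) -> None:
        """Record an answer, which reopens the follow-up window."""
        self.last_response_at = now


session = Session()
print(session.accepts("what's the weather", now=0.0))         # False: no wake word yet
print(session.accepts("Alexa, what's the weather", now=1.0))  # True
session.respond(now=2.0)
print(session.accepts("and tomorrow?", now=4.0))              # True: within the window
print(session.accepts("and on Friday?", now=10.0))            # False: window has closed
```

A real assistant also has to judge whether follow-up speech is actually addressed to the device, which this timer-only sketch ignores.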

Alexa is already context-aware: she tailors her decisions to the device a consumer is using. For example, say a user tells Alexa to “play Hunger Games.” On a smart device with a screen, such as the Echo Show, this request is more likely to launch the movie, whereas on an audio-only Echo speaker it will most likely launch the audiobook. Two recently announced features extend her awareness of auditory context far beyond recognizing and understanding words. Sound detection lets Alexa Guard recognize smoke alarms, carbon monoxide alarms, and breaking glass, while whisper detection lets Alexa recognize when a user is whispering.
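A hand-written rule is enough to illustrate the “play Hunger Games” example: the same request resolves to different media depending on whether the device has a screen. Amazon has not published how Alexa makes this choice, and its real system is likely model-driven; the `Device` type, the `CATALOG` contents, and `resolve_play` below are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    has_screen: bool


# Hypothetical catalog: one title available in several media formats
CATALOG = {
    "hunger games": {
        "video": "The Hunger Games (movie)",
        "audiobook": "The Hunger Games (audiobook)",
    },
}


def resolve_play(title: str, device: Device) -> str:
    """Pick a media format for a 'play <title>' request based on the device."""
    formats = CATALOG[title.lower()]
    # Screen devices prefer video; audio-only devices only consider audio formats
    preference = ["video", "audiobook"] if device.has_screen else ["audiobook"]
    for fmt in preference:
        if fmt in formats:
            return formats[fmt]
    raise LookupError(f"no playable format of {title!r} for {device.name}")


print(resolve_play("Hunger Games", Device("Echo Show", has_screen=True)))
print(resolve_play("Hunger Games", Device("Echo Dot", has_screen=False)))
```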

One of the techniques behind these improvements is called active learning. Here, algorithms sift through the available training data and single out the examples most likely to yield meaningful improvements in accuracy. Alexa researchers have found that active learning can reduce the amount of data needed to train a machine learning system by a whopping 97 percent, streamlining the development of Alexa’s natural language understanding systems. In the past, these techniques required data laboriously annotated by hand, but with active learning, Alexa can learn intelligently from previous interactions while needing far fewer annotated examples.
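Active learning comes in several flavors; a common one is uncertainty sampling, where the examples the current model is least sure about are sent for labeling first. The sketch below illustrates that generic strategy, not Amazon’s published method; the intent names, utterances, and probabilities are invented for the example.

```python
import math
from typing import Dict, List


def entropy(probs: List[float]) -> float:
    """Shannon entropy in nats; higher means the model is less certain."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def select_for_labeling(predictions: Dict[str, List[float]], k: int) -> List[str]:
    """Uncertainty sampling: return the k utterances the model is least sure about."""
    ranked = sorted(predictions, key=lambda u: entropy(predictions[u]), reverse=True)
    return ranked[:k]


# Hypothetical intent probabilities: [PlayMusic, PlayAudiobook, GetWeather]
predictions = {
    "play some jazz": [0.95, 0.03, 0.02],
    "play hunger games": [0.40, 0.45, 0.15],
    "what's it like outside": [0.10, 0.05, 0.85],
    "play the next chapter": [0.50, 0.48, 0.02],
}

# Only the most ambiguous utterances go to human annotators
print(select_for_labeling(predictions, k=2))
```

Confident predictions such as “play some jazz” are skipped, so annotation effort goes where the model is most likely to improve.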

Another new self-learning technique, which will be deployed in the next few months, is called automatic equivalence class learning. It addresses the fact that experienced Alexa customers often rephrase requests that initially fail. For instance, if someone in Seattle asks Alexa to play a radio station such as Sirius XM Chill and the request fails, they may rephrase it as “Sirius channel 53.” Alexa’s automated systems will recognize that the two requests share the word “Sirius,” and if the second request succeeds, Alexa will learn that both names refer to the same entity. In the future, the user can then use either phrase to get the desired result.
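The rephrasing rule described above can be sketched with a union-find structure that merges phrases into equivalence classes. This is an illustrative assumption, not Amazon’s implementation: the `Turn`, `EquivalenceClasses`, and `learn_from_session` names are hypothetical, and the shared-word check is taken directly from the Sirius XM example, whereas a production system would presumably rely on learned models and many more signals.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Turn:
    text: str
    succeeded: bool


class EquivalenceClasses:
    """Union-find over phrases; phrases in one class are treated as the same entity."""

    def __init__(self) -> None:
        self.parent: Dict[str, str] = {}

    def find(self, x: str) -> str:
        self.parent.setdefault(x, x)
        while self.parent[x] != x:
            self.parent[x] = self.parent[self.parent[x]]  # path halving
            x = self.parent[x]
        return x

    def union(self, a: str, b: str) -> None:
        self.parent[self.find(a)] = self.find(b)


def learn_from_session(turns: List[Turn], classes: EquivalenceClasses) -> None:
    """If a failed request is immediately followed by a successful one and the two
    share at least one word, treat them as naming the same entity."""
    for prev, nxt in zip(turns, turns[1:]):
        shared = set(prev.text.lower().split()) & set(nxt.text.lower().split())
        if not prev.succeeded and nxt.succeeded and shared:
            classes.union(prev.text.lower(), nxt.text.lower())


classes = EquivalenceClasses()
session = [Turn("Sirius XM Chill", succeeded=False), Turn("Sirius channel 53", succeeded=True)]
learn_from_session(session, classes)
print(classes.find("sirius xm chill") == classes.find("sirius channel 53"))  # True
```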

Although Amazon and Google are the drivers behind this massive shift toward voice AI, the trend is attracting some new and unlikely companies looking to cash in. The growing number of smart devices, such as advanced thermostats, appliances, and speakers, is giving voice assistants a larger role in a connected user’s life. Voice interfaces are also spreading beyond devices into industries such as banking and healthcare, as companies race to release their own voice integrations to keep up with growing consumer demand. For now, smart speakers such as the Amazon Echo remain the most common way consumers use voice AI, but it’s only up from here.

More News Posts

Don’t Worry About the New Amazon Inbound FBA Fee

Spoiler alert - The new inbound and FBA fees aren’t…

Amazon and Walmart in Close Competition in Latest Marketplace Polling

In the ever-evolving landscape of retail giants, Amazon, Walmart, and…

Agency vs. Full-Time Staff: Making the Right Choice for Your Business

As businesses grow and scale, the inevitable dilemma arises: do…

Podean’s unBoxed 2023 Recap: Day 1

unBoxed 2023 is officially underway and what an exciting day…

How to Launch a New Agency by Using Fancy Buzzwords

Every now and then, we hear noise in our industry…

Alexa – The Ultimate Trojan Horse?

Amazon typically keeps its numbers close its chest. But exactly…

Amazon or your DNA: What knows you better?

As marketers, we spend a lot of time trying to…

Amazon Explained – The Day 1 Mentality

Amazon CEO Jeff Bezos has always been a strong proponent…

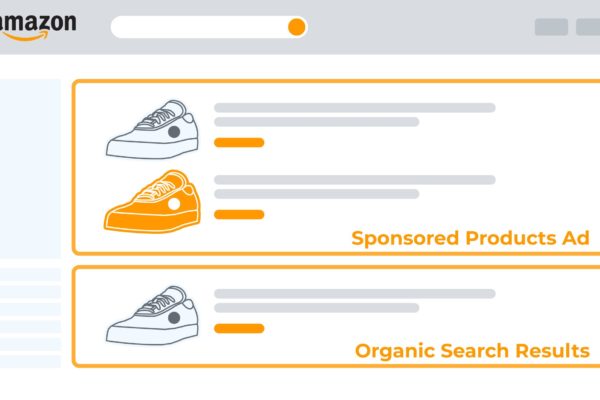

A Beginner’s Guide to Sponsored Products

Podean’s Amazon Explained Series delivers insights into the drivers of…

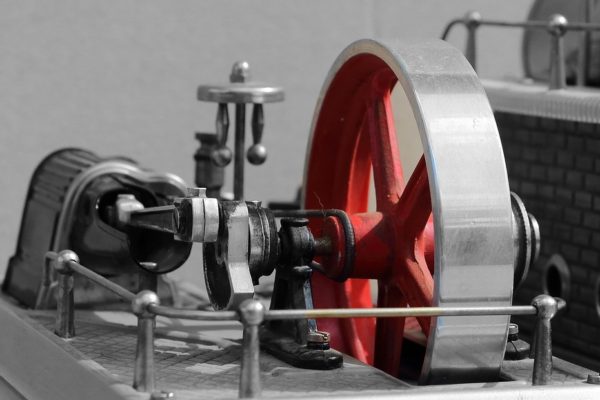

Amazon Explained – The Flywheel

Podean’s Amazon Explained Series delivers insights into the drivers of…

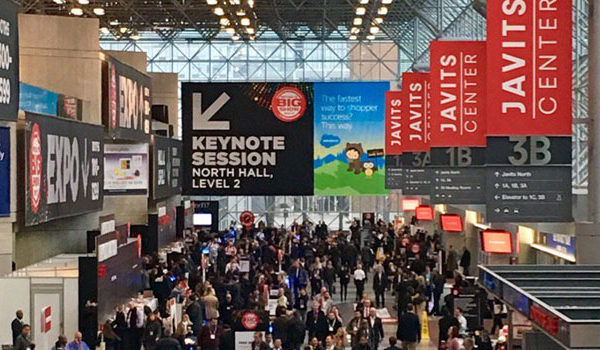

Top 10 Must-Attend Amazon & E-commerce Events

Insights from the Podean Marketplace Intelligence Team It can be…

Amazon Selling 101: Top 20 Tips for Selling on Amazon (1-10)

Insights from the Podean Marketplace Intelligence Team What are some…

Amazon Selling 101: Top 20 Tips for Selling on Amazon (11-20)

Insights from the Podean Marketplace Intelligence Team Most people think…

Amazon Explained: What is the Amazon “Narrative”?

In Jeff Bezos’ letters to shareholders he often mentions how…

About Podean
